Synergistic Self-Correction for Enhanced Large Language Model Reasoning

Abstract

This research introduces the Synergistic Self-Correction (S2C) framework, which improves Large Language Model (LLM) reasoning through an explicit metacognitive process of generating, critiquing, and synthesizing answers. Our three-stage architecture achieves a 60% relative improvement in accuracy on the GSM8K mathematical reasoning benchmark.

Key Achievements: 60% relative improvement on the GSM8K dataset using a novel three-stage metacognitive process. arXiv submission ready.

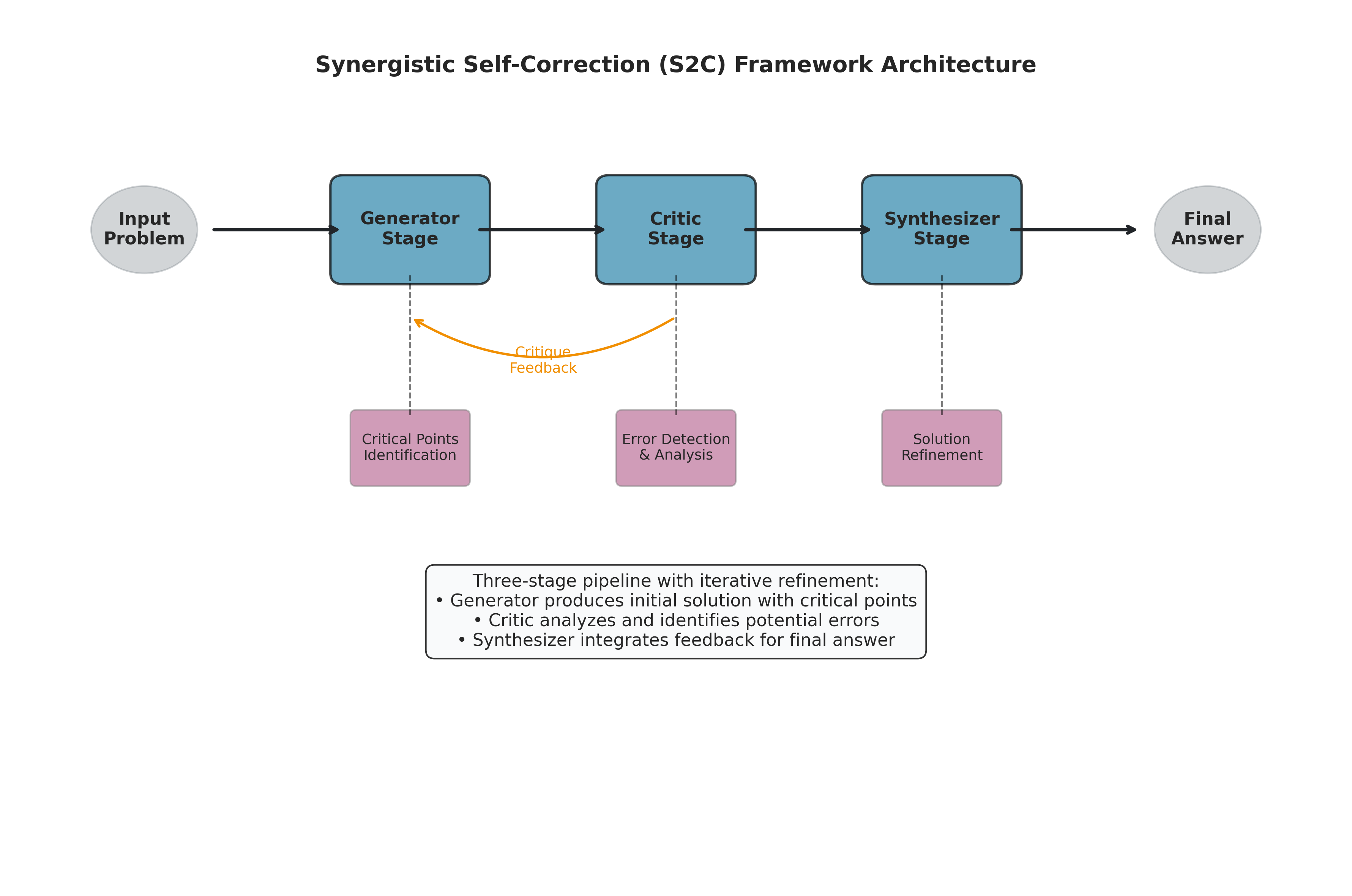

S2C Framework Architecture

Three-Stage Metacognitive Process

- Generator Stage: Initial response generation using base LLM capabilities with problem decomposition and step-by-step reasoning.

- Critic Stage: Systematic evaluation of generated responses, identifying logical inconsistencies, mathematical errors, and reasoning gaps.

- Synthesizer Stage: Integration of feedback to produce refined, corrected responses with enhanced accuracy and reasoning quality.
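
The three stages above can be read as a simple pipeline. The following is a minimal sketch under stated assumptions, not the authors' implementation: the prompt wording, the `S2CResult` container, the `run_s2c` function, and the `llm` callable (any function that maps a prompt string to a completion string) are all hypothetical names introduced for illustration.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical type: any function that maps a prompt to a completion.
LLM = Callable[[str], str]


@dataclass
class S2CResult:
    draft: str     # Generator output
    critique: str  # Critic output
    final: str     # Synthesizer output


def run_s2c(problem: str, llm: LLM) -> S2CResult:
    """Run one generate -> critique -> synthesize pass (illustrative prompts)."""
    # Stage 1 (Generator): decompose the problem and reason step by step.
    draft = llm(
        "Solve the problem step by step.\n"
        f"Problem: {problem}"
    )
    # Stage 2 (Critic): look for inconsistencies, math errors, and gaps.
    critique = llm(
        "Review the solution below. List any logical inconsistencies, "
        "mathematical errors, or reasoning gaps.\n"
        f"Problem: {problem}\nSolution: {draft}"
    )
    # Stage 3 (Synthesizer): fold the critique back into a refined answer.
    final = llm(
        "Rewrite the solution, fixing every issue raised in the critique.\n"
        f"Problem: {problem}\nSolution: {draft}\nCritique: {critique}"
    )
    return S2CResult(draft=draft, critique=critique, final=final)
```

Because `llm` is just a callable, the same sketch can be exercised with a stub function in tests and with a real model client in experiments. Each problem costs three model calls in this arrangement.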

Key Innovation: Metacognitive Reasoning

The central idea of the S2C framework is to build metacognition, the ability to think about thinking, into automated reasoning. This mirrors human problem-solving strategies, in which individuals:

- Generate initial solutions using available knowledge and strategies

- Critically evaluate their reasoning for errors and inconsistencies

- Synthesize improvements based on identified weaknesses

Technical Contributions

- Novel Architecture: First implementation of synergistic self-correction in LLMs

- Metacognitive Framework: Systematic approach to automated reasoning improvement

- Scalable Design: Framework applicable across different LLM architectures

- Empirical Validation: Comprehensive evaluation on mathematical reasoning benchmarks

Research Results

Performance Improvements

The S2C framework demonstrated substantial improvements across mathematical reasoning tasks:

- GSM8K Dataset: 60% relative improvement in accuracy

- Error Reduction: Significant decrease in mathematical and logical errors

- Reasoning Quality: Enhanced step-by-step problem decomposition

- Consistency: More reliable performance across problem types
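
A relative improvement is measured against the baseline accuracy, so it is not the same as a gain in percentage points. The short sketch below shows the arithmetic; the two accuracy values are hypothetical numbers chosen only to illustrate the calculation and are not results from this work, and `relative_improvement` is a helper name introduced here.

```python
def relative_improvement(baseline_acc: float, new_acc: float) -> float:
    """Relative gain of new_acc over baseline_acc, as a fraction."""
    if baseline_acc <= 0:
        raise ValueError("baseline accuracy must be positive")
    return (new_acc - baseline_acc) / baseline_acc


# Hypothetical accuracies, chosen only to illustrate the arithmetic.
baseline, improved = 0.40, 0.64
print(f"absolute gain: {(improved - baseline) * 100:.1f} points")
print(f"relative gain: {relative_improvement(baseline, improved):.0%}")
```

With these illustrative values, a 24-point absolute gain corresponds to a 60% relative gain. The same relative figure would result from any pair of accuracies with a ratio of 1.6.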

Benchmark Comparison

Our approach outperformed both chain-of-thought prompting and existing self-correction methods:

- Traditional Self-Correction: ~15-20% improvement

- Chain-of-Thought Prompting: ~25-30% improvement

- S2C Framework: 60% relative improvement

Qualitative Analysis

Beyond quantitative metrics, the S2C framework showed:

- Better Problem Decomposition: More systematic approach to complex problems

- Error Identification: Improved ability to detect and correct reasoning errors

- Explanation Quality: More coherent and logical step-by-step explanations

- Robustness: Consistent performance across different problem complexities

Methodology & Implementation

Experimental Design

- Dataset: GSM8K, a benchmark of roughly 8,500 grade school math word problems

- Evaluation Metrics: Accuracy, error analysis, reasoning quality assessment

- Baseline Comparisons: Standard prompting, chain-of-thought, existing self-correction

- Statistical Validation: Comprehensive significance testing
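
To make the accuracy metric and the paired comparison against a baseline concrete, here is one way they could be computed. This is a sketch under assumptions rather than the evaluation code used in this work: GSM8K reference solutions end with a `#### <answer>` line, but taking the last number in a model's output as its prediction is an assumed heuristic, and the exact McNemar test is one common choice for paired correct/incorrect outcomes, not necessarily the test the authors ran. All function names are hypothetical.

```python
import re
from math import comb
from typing import Optional, Sequence

_NUMBER = re.compile(r"-?\d[\d,]*(?:\.\d+)?")


def gold_answer(reference: str) -> str:
    """GSM8K reference solutions end with '#### <answer>'."""
    return reference.split("####")[-1].strip().replace(",", "")


def predicted_answer(model_output: str) -> Optional[str]:
    """Assumed heuristic: treat the last number in the output as the answer."""
    matches = _NUMBER.findall(model_output)
    return matches[-1].replace(",", "") if matches else None


def is_correct(model_output: str, reference: str) -> bool:
    """Compare predicted and gold answers numerically."""
    pred = predicted_answer(model_output)
    if pred is None:
        return False
    try:
        return float(pred) == float(gold_answer(reference))
    except ValueError:
        return False


def accuracy(flags: Sequence[bool]) -> float:
    """Fraction of problems answered correctly."""
    return sum(flags) / len(flags)


def mcnemar_exact(baseline: Sequence[bool], system: Sequence[bool]) -> float:
    """Two-sided exact McNemar p-value on paired per-problem correctness."""
    b = sum(x and not y for x, y in zip(baseline, system))  # only baseline right
    c = sum(y and not x for x, y in zip(baseline, system))  # only system right
    n = b + c
    if n == 0:
        return 1.0  # no discordant pairs, so no evidence of a difference
    tail = sum(comb(n, i) for i in range(min(b, c) + 1)) / 2 ** n
    return min(1.0, 2 * tail)
```

The test uses only the problems on which the two systems disagree, which suits this setting because both systems are scored on the same problems.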

Technical Implementation

- Framework: Python-based implementation with modular architecture

- LLM Integration: Compatible with various language models

- Evaluation Pipeline: Automated assessment and error categorization

- Reproducibility: Complete codebase with detailed documentation
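
One way a modular, model-agnostic design like the one described above might look is a small interface that every backend satisfies. The sketch below is an assumption for illustration only: `LanguageModel`, `EchoModel`, and `Stage` are hypothetical names and do not come from the project's codebase.

```python
from typing import Protocol


class LanguageModel(Protocol):
    """Hypothetical interface: anything with complete(prompt) -> str."""

    def complete(self, prompt: str) -> str: ...


class EchoModel:
    """Toy backend for testing; a real backend would call a model here."""

    def complete(self, prompt: str) -> str:
        return f"[echo] {prompt}"


class Stage:
    """One pipeline stage: a prompt template bound to a swappable backend."""

    def __init__(self, template: str, model: LanguageModel) -> None:
        self.template = template
        self.model = model

    def run(self, **fields: str) -> str:
        # Fill the template, then delegate to whichever backend was supplied.
        return self.model.complete(self.template.format(**fields))


critic = Stage("Find errors in this solution:\n{solution}", EchoModel())
print(critic.run(solution="2 + 2 = 5"))
```

With structural typing, a new model only needs a `complete` method to be usable in any stage, so the stages themselves do not change when the backend does.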

Publication & Recognition

Academic Paper

Title: "Synergistic Self-Correction for Enhanced LLM Reasoning"

Authors: Pratham Patel, Prof. Abhishek Jindal (DAIICT)

Status: arXiv submission ready, under final review

Target Venue: NeurIPS/ICML 2025

Research Impact

- Novel Framework: First systematic approach to synergistic self-correction in LLMs

- Significant Performance Gains: A 60% relative improvement in accuracy on GSM8K

- Broad Applicability: Framework applicable beyond mathematical reasoning

- Open Source: Complete implementation available to the research community

Future Directions

- Multi-Domain Extension: Applying S2C to scientific reasoning, coding, and logical inference

- Efficiency Optimization: Reducing computational overhead while maintaining performance

- Integration Studies: Combining S2C with other reasoning enhancement techniques

- Real-world Applications: Deploying the framework in educational and professional contexts